Testing Automation for OpenSearch Releases

OpenSearch releases many distributions across multiple platforms as part of each new version. These distributions come in two types: the default distribution, which includes all plugins, and the min distribution, which includes no plugins. They go through a rigorous testing process across multiple teams before they are signed off as “release ready”. This process includes unit testing, integration testing to validate behavioral integrity, backward compatibility testing to ensure upgrade compatibility with previous versions, and stress testing to validate performance characteristics.

The rigorous testing process provides good confidence in the quality of the release. However, until now it has been manual and non-standardized across plugins: each plugin team validated its component by running tests on the distribution and provided its sign-off manually. With dozens of OpenSearch plugins released as part of the default distribution, the turnaround time for testing was high. In addition, the lack of a continuous integration and testing process led to late bug discoveries, which further lengthened release times. The automated testing framework solves these problems by simplifying and standardizing the testing process across all components of a release distribution.

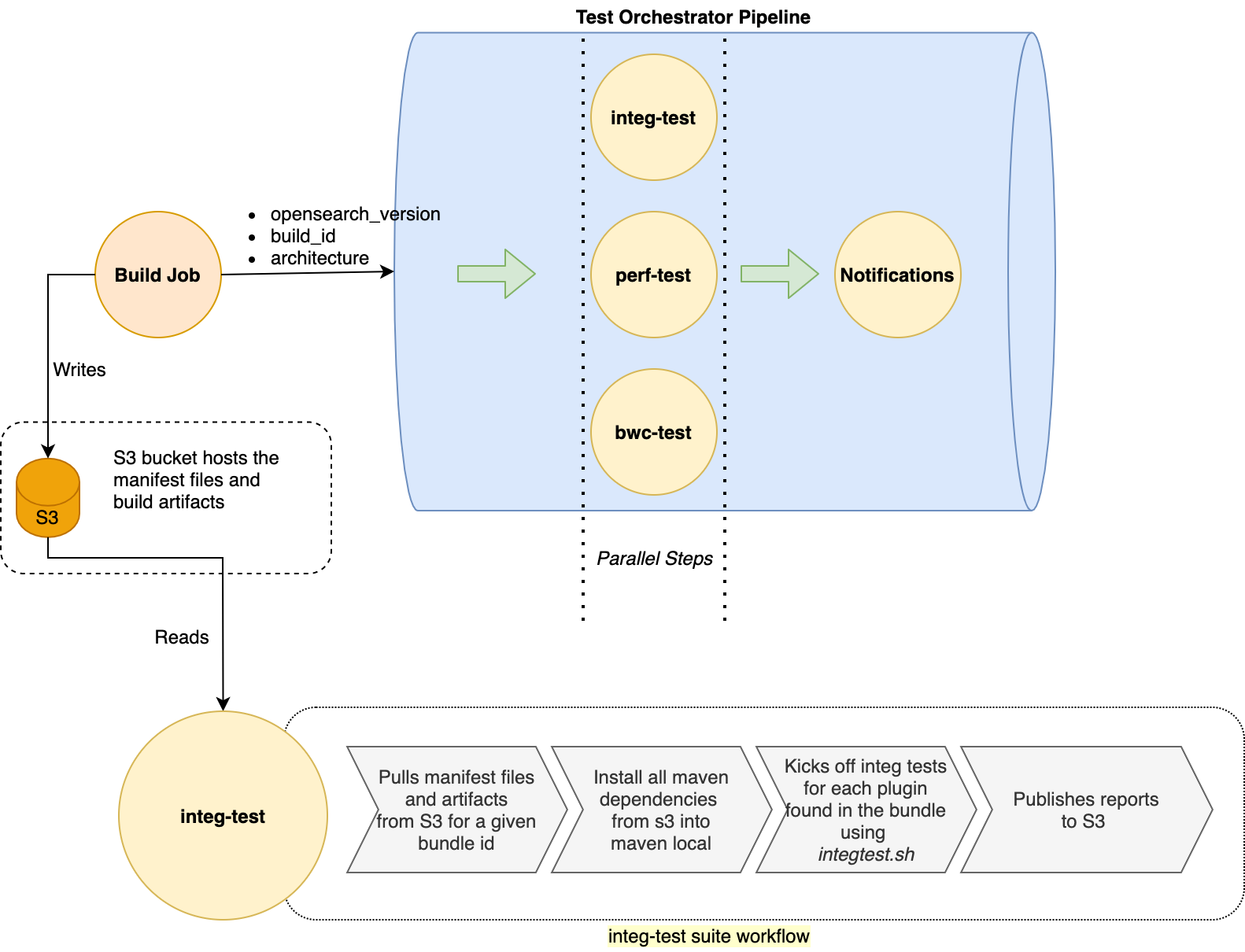

When a new bundle is ready, the build-workflow (explained in this blog) kicks off the test-orchestrator-pipeline with input parameters that uniquely identify the bundle in S3. The test-orchestrator-pipeline is a Jenkins pipeline that orchestrates the test workflow by running three test suites in parallel: integ-test (integration testing), bwc-test (backward compatibility testing), and perf-test (performance testing). Each of these test suites is itself a Jenkins pipeline that executes the corresponding type of test.
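The real orchestrator is a Jenkins pipeline; the Python sketch below only illustrates the fan-out pattern it follows, where one set of bundle parameters is handed to three suites that run in parallel. `BundleRef`, its fields, the S3 key layout, and the three placeholder suite functions are hypothetical stand-ins, not names taken from the OpenSearch build repository.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class BundleRef:
    """Hypothetical parameters that uniquely locate one bundle in S3."""
    version: str
    build_id: str
    platform: str
    architecture: str

    def s3_prefix(self) -> str:
        # Hypothetical key layout; the real bucket structure may differ.
        return f"bundles/{self.version}/{self.build_id}/{self.platform}/{self.architecture}"


# Placeholder suites: each stands in for a separate Jenkins pipeline
# and returns True when its tests pass.
def integ_test(bundle: BundleRef) -> bool:
    return True


def bwc_test(bundle: BundleRef) -> bool:
    return True


def perf_test(bundle: BundleRef) -> bool:
    return True


SUITES: Dict[str, Callable[[BundleRef], bool]] = {
    "integ-test": integ_test,
    "bwc-test": bwc_test,
    "perf-test": perf_test,
}


def orchestrate(bundle: BundleRef) -> Dict[str, bool]:
    """Run every suite in parallel against the same bundle and collect results."""
    with ThreadPoolExecutor(max_workers=len(SUITES)) as pool:
        futures = {name: pool.submit(suite, bundle) for name, suite in SUITES.items()}
        # .result() blocks until each suite finishes and re-raises its exceptions.
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    bundle = BundleRef("1.2.0", "1234", "linux", "x64")
    print(orchestrate(bundle))  # {'integ-test': True, 'bwc-test': True, 'perf-test': True}
```

Calling `.result()` on each future also re-raises any exception a suite raised, so a crashing suite surfaces in the orchestrator instead of being silently dropped.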

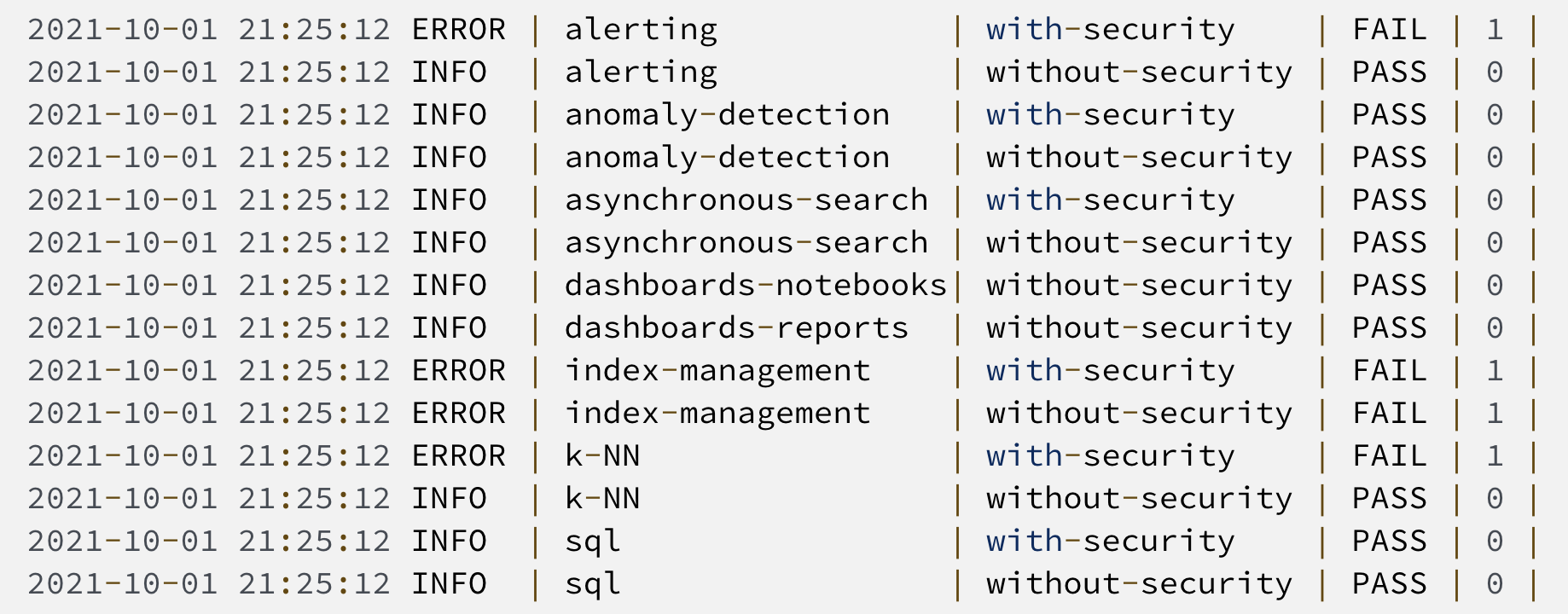

Like the build-workflow, these test workflows are manifest-based. The integ-test suite reads the bundle manifest file to identify the type and components of the bundle under test. It pulls all Maven and build dependencies needed to run integration tests on the bundle from S3; these dependencies are built as part of the build-workflow and reused for testing. It then runs integration tests for each component in the distribution, based on the component's test config defined in the test-manifest file. For each test config, it spins up a new dedicated local cluster and tears the cluster down after the test run completes. The test and cluster logs are published to S3 after the test workflow completes.

The bwc-test suite works similarly to the integ-test suite, but runs backward compatibility tests. Currently, it only supports backward compatibility tests for OpenSearch and the anomaly-detection plugin, but there is an ongoing effort to add more plugins.

The perf-test suite runs performance tests with Rally tracks on a dedicated external cluster. This piece is currently in development.

Once all test suites complete, the notifications job sends out notifications to all subscribed channels. Figure 1 illustrates how the different components of the test workflow interact with each other. Figure 2 shows a sample test report generated by the integration testing workflow on a release candidate build.
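The per-component loop of the integ-test suite can be sketched in Python as follows. The manifest dictionaries are simplified, hypothetical stand-ins for the real YAML bundle and test manifests, and `local_cluster` and `run_component_tests` are placeholders; pulling dependencies from S3 and publishing logs are omitted. The point of the sketch is the lifecycle: one fresh cluster per (component, test config) pair, torn down in a `finally` block so a failing test cannot leak a cluster into the next run.

```python
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Dict, Iterator, List

# Hypothetical, simplified manifests. The real files are YAML with a richer schema.
BUNDLE_MANIFEST = {
    "build": {"name": "OpenSearch", "version": "1.2.0"},
    "components": [
        {"name": "OpenSearch"},
        {"name": "anomaly-detection"},
        {"name": "security"},
    ],
}

TEST_MANIFEST = {
    "components": {
        "anomaly-detection": {"test-configs": ["with-security", "without-security"]},
        "security": {"test-configs": ["with-security"]},
    }
}


@dataclass
class LocalCluster:
    """Stand-in for a dedicated, single-use local test cluster."""
    component: str
    config: str
    running: bool = False


@contextmanager
def local_cluster(component: str, config: str) -> Iterator[LocalCluster]:
    # Spin up a fresh cluster for exactly one (component, config) pair...
    cluster = LocalCluster(component, config, running=True)
    try:
        yield cluster
    finally:
        # ...and always tear it down, even if the tests raise.
        cluster.running = False


def run_component_tests(cluster: LocalCluster) -> bool:
    """Placeholder for invoking a component's integration tests on the cluster."""
    return cluster.running


def run_integ_tests(bundle_manifest: dict, test_manifest: dict) -> List[Dict[str, object]]:
    results: List[Dict[str, object]] = []
    for component in bundle_manifest["components"]:
        name = component["name"]
        spec = test_manifest["components"].get(name)
        if spec is None:
            continue  # no integration-test config for this component in the sample
        for config in spec["test-configs"]:
            with local_cluster(name, config) as cluster:
                passed = run_component_tests(cluster)
            results.append({"component": name, "config": config, "passed": passed})
    return results
```

On this sample data, `run_integ_tests(BUNDLE_MANIFEST, TEST_MANIFEST)` yields three results: two for anomaly-detection and one for security. OpenSearch is skipped only because the sample test manifest gives it no config.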

Figure 1: Automated test workflow explained

Figure 2: A sample test report generated by the integ-test workflow. The with-security config denotes a test run with the security plugin enabled; the without-security config denotes a test run with the security plugin disabled.
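A report like the one in Figure 2 is essentially a pivot: one row per component and one column per test config. Below is a minimal sketch of that pivot plus a single overall status that a notifications job could send. It assumes results arrive as a flat list of records with hypothetical `component`, `config`, and `passed` fields; the sample records are illustrative only, not real test results.

```python
from collections import defaultdict
from typing import Dict, List


def summarize(results: List[dict]) -> Dict[str, Dict[str, str]]:
    """Pivot flat results into {component: {config: "PASS" | "FAIL"}}."""
    table: Dict[str, Dict[str, str]] = defaultdict(dict)
    for r in results:
        table[r["component"]][r["config"]] = "PASS" if r["passed"] else "FAIL"
    return dict(table)


def overall_status(results: List[dict]) -> str:
    """A release candidate passes only if every (component, config) run passed."""
    return "PASS" if all(r["passed"] for r in results) else "FAIL"


def render(table: Dict[str, Dict[str, str]]) -> str:
    """Render the pivot as plain text; "-" marks configs a component does not run."""
    configs = sorted({c for row in table.values() for c in row})
    lines = [" | ".join(["component"] + configs)]
    for component in sorted(table):
        lines.append(" | ".join([component] + [table[component].get(c, "-") for c in configs]))
    return "\n".join(lines)


if __name__ == "__main__":
    # Illustrative records only.
    sample = [
        {"component": "anomaly-detection", "config": "with-security", "passed": True},
        {"component": "anomaly-detection", "config": "without-security", "passed": False},
        {"component": "security", "config": "with-security", "passed": True},
    ]
    print(render(summarize(sample)))
    # component | with-security | without-security
    # anomaly-detection | PASS | FAIL
    # security | PASS | -
    print("overall:", overall_status(sample))  # overall: FAIL
```

Reducing the grid to one status keeps notifications short, while the full table remains available for anyone investigating a failure.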

This testing automation speeds up the release process by providing a quick feedback loop that surfaces issues sooner. It also standardizes the testing process and enforces strict quality controls across all components. The code is entirely open source, and development work is being tracked on this project board. We welcome your comments and contributions to make this system better and more useful for everyone.